New research from the University of Virginia School of Law, which is a partner in the Center for Statistics and Applications in Forensic Evidence, or CSAFE, suggests that jurors have good reason to question what they're told, and what they think they know, about scientific evidence.

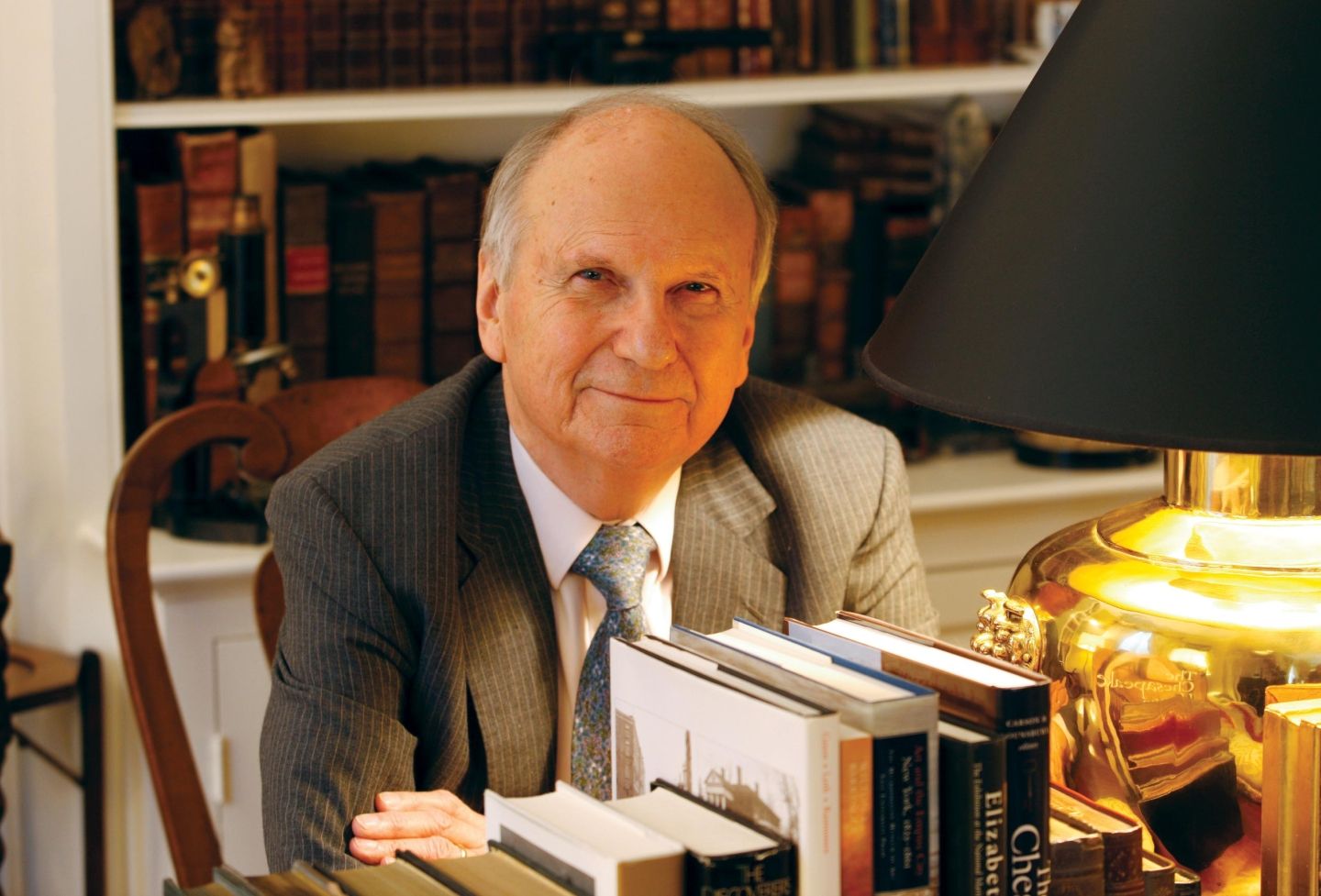

Professors Brandon Garrett, a principal investigator for the Law School’s CSAFE projects, and Greg Mitchell have co-authored a series of new papers on the problem of how jurors and others think about forensics, including a forthcoming article casting doubt on claims of expert proficiency.

"We have a concern that judges and policymakers are focused on the wrong questions," Garrett said. "They're focused on: How do you explain the ultimate conclusion the forensic analyst reaches? We think they need to focus more on error rates and proficiency. It may not matter how the analyst phrases the conclusion; once the jury hears it is a match, they think it's a match."

In their article forthcoming in the University of Pennsylvania Law Review, "The Proficiency of Experts," Garrett and Mitchell examine two decades' worth of fingerprint data and find high variability in error rates. That finding contrasts with the high degree of reliability often claimed by forensics practitioners.

Federal judges, under Federal Rule of Evidence 702 and the precedent-setting U.S. Supreme Court case Daubert v. Merrell Dow Pharmaceuticals, "must consider the 'known or potential rate of error' for an expert’s technique," the paper states. "Judges, however, often do not reach the question [of] whether the particular expert or laboratory has a 'known or potential rate of error.'"

The paper argues "both state and federal courts fail to carefully consider proficiency."

The professors' article jibes with a report from the White House's President's Council of Advisors on Science and Technology, issued in September, that urged the legal system to reconsider its standards for admitting forensic evidence, including some widely used methods of analysis that may strain scientific certainty.

The report also underscored the importance of sound testing methods to assess “an examiner’s capability and performance in making accurate judgments.”

Mitchell and Garrett said that assessments of lab analysts often feature scenarios that are too simplistic and don't reflect real casework. As a result, test administrators may issue high marks, allowing some experts to claim their work is 100 percent reliable.

The professors recommend that lawmakers mandate rigorous proficiency testing, including the use of "blind" methods, and require that the results be shared publicly.

In a second paper, "Forensics and Fallibility: Comparing the Views of Lawyers and Jurors," published in the West Virginia Law Review, the professors examine how the preconceptions of jurors and lawyers may affect interpretations of scientific validity.

Garrett and Mitchell found that jury-eligible adults placed considerable weight on fingerprint evidence, despite problems that can occur, such as false-positive or false-negative matches (the latter ruling out a potentially matching subject), or samples that are inconclusive but are presented as otherwise.

Potential jurors also tended to heavily embrace DNA evidence, while attorneys — in particular defense attorneys — viewed forensics with greater skepticism.

The professors' third and most recent paper, “The Impact of Proficiency Testing Information on the Weight Given to Fingerprint Evidence,” surveyed more than 1,400 laypeople to examine how jurors might assess evidence of a fingerprint examiner’s proficiency.

Mitchell and Garrett learned that laypeople assume that fingerprint examiners are proficient, but not perfect. Jurors were responsive to information about proficiency; they gave more weight to evidence from more proficient examiners than to evidence from less proficient ones.

"The study suggests that proficiency information is highly informative to jurors and that they incorporate that information carefully into their conclusions when weighing evidence in a criminal case," Garrett said.

Future studies by Garrett and Mitchell will further explore how jurors evaluate forensic evidence, including new types of quantitative evidence.

CSAFE was established by the U.S. Commerce Department’s National Institute of Standards and Technology as a Science Center of Excellence. The center, based at Iowa State University and coordinated at UVA by Karen Kafadar, chair of the UVA Department of Statistics, began its local efforts two years ago.

The center focuses on the statistical analysis of pattern and digital evidence, but also delves into the end use of forensics in the justice system.

